“Looking for failures in a system requires curiosity, professional pessimism, a critical eye, attention to detail, good communication with development peers, and experience on which to base error guessing.” Would this sentence from the CTFL syllabus scare you away, too? It sounds so depressing. Professional pessimism. Who wants that? Only testers, I guess. They can cope, and they find their satisfaction in knowing that it serves a good purpose.

But then there are situations where you have to bring people into your team who are not testers at heart. As Georg Haupt from OOSE described in the talk he gave at the last StugHH event, people from helpdesks or other areas without test training, such as end users of large systems, can be of great benefit. Let us call these helpful people “business testers”.

According to a 2015 testing survey by HS Bremen, HS Bremerhaven, and TH Köln, about 81% of companies apply use-case-based test case creation methods in order to get good test coverage. It only sounds reasonable, then, to also involve business testers.

But – we want to automate tests! So, on top of trying to teach/implant the end users some testing gene, we also have to teach them to automate tests? NO WAY.

To the Rescue

Luckily, there are some old and actually well-known approaches to shield the business testers from technology. Micro Focus ALM (better known as HP ALM or even Quality Center) has its “Business Process Testing”, where reusable, parametrizable script snippets (coded in UFT) are called by keywords, even by a person who does not know the processes behind them.

Tricentis with TOSCA has something they call “Model-based Test automation”. I have not yet worked with TOSCA, but the approach is described as “Model-based test automation (that) decouples the test cases from the system’s underlying technology. You can think of TOSCA’s automation model as a reverse-engineered interface catalogue of the system-under-test, in which modules (components of the automation model) are proxies of the interfaces.” Another benefit is described as “One major feature where this tool is gaining leverage over other automation tools is due to its model based technique where a model of AUT (application under test) is created instead of scripts for test automation. All the technical details about AUT, test script logic and test data are separately saved and merged together at the time of test case execution. The central model gets updated the moment any change is encountered in elements of the application.” So the major difference seems to be that for TOSCA you do not have to code the stuff behind the scenes.

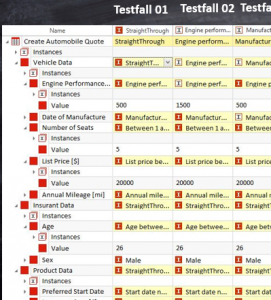

Anyway, before I get into product discussions here: the main benefit of both variants is that, in the end, the test case looks to the end user almost like an Excel sheet, where they only have to specify, per field and per block, which value to enter. Such an entirely data-driven approach can really help to overcome the fear of testing. Business testers love Excel!
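To make the data-driven idea a bit more concrete, here is a minimal sketch in Python. It assumes the sheet is exported as CSV with one row per test case and one column per `block.field`. The column names, the sample data, and the `enter_value` helper are all hypothetical; none of this is taken from ALM, TOSCA, or my project's tool.

```python
import csv
import io

# Hypothetical sheet export: one row per test case, one column per "block.field".
SHEET = """test_case,customer.name,customer.city,order.quantity
TC-001,Alice,Hamburg,2
TC-002,Bob,,5
"""


def enter_value(block, field, value, log):
    # Stand-in for the real automation layer that would type into the application.
    log.append(f"{block}.{field} <- {value}")


def run_sheet(sheet_text):
    """Replay every row of the sheet; an empty cell means 'leave the field alone'."""
    results = {}
    for row in csv.DictReader(io.StringIO(sheet_text)):
        log = []
        case_id = row.pop("test_case")
        for column, value in row.items():
            if value == "":
                continue
            block, field = column.split(".", 1)
            enter_value(block, field, value, log)
        results[case_id] = log
    return results


if __name__ == "__main__":
    for case_id, steps in run_sheet(SHEET).items():
        print(case_id, steps)
```

The business tester only ever touches the sheet; everything below `run_sheet` stays with the test automation engineer.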

In my current project I have quite a few of those business testers. While they are still learning testing, they will also support automation. We do not use either of the above products, but something specific to the product being implemented, after some customization. In the end, we will also be able to use Excel sheets for a data-driven approach to creating test cases here. Depending on the modularization concept chosen (the span of the functions in ALM business testing, the scope of the model in TOSCA, the length of a script in my project), this is already proving to be very helpful.

Practical Stuff

As this is a work in progress, here is a list of the observations we have made so far, which I think will be the same for any of the tools:

| Good practices | Possible Pain Points |
| --- | --- |

Depending on the tool chosen, the exposure of the test scripts to the business tester is very different. While Micro Focus suggests having the scripts written by a test programmer and hidden from the end user, Tricentis says that with TOSCA most code is automatically scanned and there is no need to see it. In my project we also have a simple scripting language that is definitely human-readable. Really complex behaviors cannot be scripted with it, nor can loops. The first problem can be resolved by subprograms that can then be used in the scripts. The idea is to train the business users at least to create the test data to run the script, but maybe also to update smaller bits inside the scripts. We believe that they can fix smaller issues themselves. However, the overall setup is done by a test automation engineer.

First experiences show that the business testers appreciate supplying test data in a format that looks very much like a list of the fields on the screens they use for testing. But we have not reached the really difficult testing tasks yet. So we are still collecting experience, and any comments are really welcome!

Hi Testhexe,

thanks for this very good article.

I love this: “Professional pessimism. Who wants that?”

I’ve worked with ALM/BPT & UFT for the past 9 years. The approach is to build “components” that represent a page/window, or a subsection of it, with its objects (the ones that will be interacted with) mapped for it. These BPT components are then pulled together to drive and execute the test itself. These components include the data and keywords combined to perform the work. Underneath the component layer is a technology/keyword layer that processes the data & keywords. Below that is the utility/support function layer, which does the rest of the work.
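The layering described above can be sketched roughly as follows. This is a toy illustration in Python, not UFT or BPT code: the `FakeDriver` class, the `KEYWORDS` table, the `Component` class, and the locators are all made-up names that only mirror the three layers.

```python
# Bottom layer: utility functions that would talk to the application.
class FakeDriver:
    """Stand-in for a real UI driver; it only records what it is asked to do."""

    def __init__(self):
        self.actions = []

    def type_into(self, locator, text):
        self.actions.append(("type", locator, text))

    def click(self, locator):
        self.actions.append(("click", locator))


# Middle layer: keywords map a readable verb onto a utility call.
KEYWORDS = {
    "set": lambda driver, locator, value: driver.type_into(locator, value),
    "click": lambda driver, locator, value: driver.click(locator),
}


# Top layer: a component represents one page together with its mapped objects.
class Component:
    def __init__(self, name, object_map):
        self.name = name
        self.object_map = object_map  # logical field name -> technical locator

    def run(self, driver, steps):
        """Execute (keyword, field, value) steps as a business tester would write them."""
        for keyword, field, value in steps:
            KEYWORDS[keyword](driver, self.object_map[field], value)


login_page = Component("Login", {
    "user": "id=username",
    "password": "id=pwd",
    "submit": "id=login-button",
})

if __name__ == "__main__":
    driver = FakeDriver()
    login_page.run(driver, [
        ("set", "user", "alice"),
        ("set", "password", "secret"),
        ("click", "submit", None),
    ])
    print(driver.actions)
```

Only the step list at the bottom is what a business tester would see; a changed locator is fixed once in the component's object map.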

The Business Testers are taught how to use the BPT components along with the keyword DSL (Domain Specific Language) to construct their “automated” tests. The Automation Developers build and maintain the components and lower layers. It works very well in that a small team of Automation Developers builds and maintains the automation infrastructure while a larger group of testers builds out the tests themselves. It leverages economies of scale.

Now is this all “Codeless/Scriptless”? No, just different layers of abstraction. And that is what is really going on with these tools that tout the codeless/scriptless “technology”. But these tools do allow smart automation developers to build out tools for non-technical testers (business oriented) to use and to help with the automation effort.

I have seen TOSCA in the past but not used it; it does allow you to create a “model” of your system and its logic. From this you can have it produce scenarios and data. These can then be automated and eventually run. That is very powerful. As you build more of the model, the process of building tests becomes more robust.
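As a rough illustration of what "producing scenarios from a model" can mean in general, here is a small Python sketch. It is not how TOSCA works internally; the `MODEL` dictionary, its states and actions, and the `scenarios` function are invented for this example. The model is a simple map of screens and the actions that lead from one to the next, and scenarios are the action sequences from a start state to a goal state.

```python
# Hypothetical model: each state lists the actions that lead to another state.
MODEL = {
    "login": {"submit credentials": "dashboard"},
    "dashboard": {"open order form": "order_form", "log out": "done"},
    "order_form": {"save order": "dashboard", "cancel": "dashboard"},
    "done": {},
}


def scenarios(model, start, goal, max_steps):
    """Return every action sequence from start to goal with at most max_steps actions."""
    found = []

    def walk(state, path):
        if state == goal:
            found.append(path)
            return
        if len(path) == max_steps:
            return  # step budget used up without reaching the goal
        for action, target in model[state].items():
            walk(target, path + [action])

    walk(start, [])
    return found


if __name__ == "__main__":
    for path in scenarios(MODEL, "login", "done", max_steps=4):
        print(" -> ".join(path))
```

Adding a state or an action to the model yields additional scenarios without anyone writing a new test by hand, which is the effect described above.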